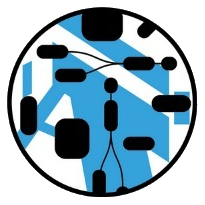

pdf-indexer

| Version: | org.nasdanika.launcher.demo@2025.6.0 |
|---|---|

Options

The size of the dynamic list of nearest neighbors used during the search. A higher ef leads to a more accurate but slower search. The value of ef can be anything between k (the number of items to return from the search) and the size of the dataset.
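The bounds on ef described above can be sketched as a small validation helper. This is only an illustration: `clamp_ef` and its parameter names are hypothetical and are not part of pdf-indexer.

```python
def clamp_ef(ef: int, k: int, dataset_size: int) -> int:
    """Clamp a requested ef into the range [k, dataset_size].

    Hypothetical helper for illustration only: k is the number of items
    to return from the search, dataset_size is the number of indexed items.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    if dataset_size < k:
        raise ValueError("dataset must contain at least k items")
    # ef below k cannot yield k results; ef above the dataset size adds nothing.
    return max(k, min(ef, dataset_size))


if __name__ == "__main__":
    print(clamp_ef(ef=5, k=10, dataset_size=1000))
    print(clamp_ef(ef=50, k=10, dataset_size=1000))
    print(clamp_ef(ef=5000, k=10, dataset_size=1000))
```

Values near k favor speed, while values near the dataset size approach an exhaustive search.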

This option has the same meaning as --hnsw-ef, but controls index construction time and index precision. A larger ef-construction leads to longer construction but better index quality. At some point, increasing ef-construction no longer improves the quality of the index. One way to check whether the chosen ef-construction is adequate is to measure the recall of an M-nearest-neighbor search with ef = ef-construction: if the recall is lower than 0.9, there is room for improvement.
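The recall check mentioned above could be sketched as follows. This is a minimal illustration, not the tool's own procedure: `exact_neighbors` and `recall` are hypothetical helpers, the ground truth is computed by brute force with Euclidean distance (an assumption), and `approx_ids` is a stand-in for whatever ids the index returns.

```python
import math


def exact_neighbors(query, vectors, k):
    """Return indices of the k vectors closest to query (brute force)."""
    order = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]


def recall(approx_ids, exact_ids):
    """Fraction of the true nearest neighbors that the index returned."""
    exact = set(exact_ids)
    if not exact:
        raise ValueError("exact_ids must not be empty")
    return len(exact & set(approx_ids)) / len(exact)


if __name__ == "__main__":
    vectors = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (5.0, 5.0)]
    truth = exact_neighbors((0.0, 0.0), vectors, k=2)
    approx_ids = [0, 2]  # placeholder for ids returned by an approximate search
    score = recall(approx_ids, truth)
    if score < 0.9:
        print(f"recall {score:.2f}: ef-construction may be too low")
```

In practice the recall would be averaged over many queries rather than computed for a single one.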

Sets the number of bi-directional links created for every new element during construction. A reasonable range for m is 2-100. Higher m values work better on datasets with high intrinsic dimensionality and/or when high recall is required, while lower m values work better on datasets with low intrinsic dimensionality and/or when low recall is acceptable. The parameter also determines the algorithm’s memory consumption. For example, for random vectors with d = 4 the optimal m for search is somewhere around 6, while high-dimensional datasets (word embeddings, good face descriptors) require higher m (e.g. m = 48 or 64) for optimal performance at high recall. The range m = 12-48 is fine for most use cases. When m is changed, the other parameters have to be updated. Nonetheless, ef and efConstruction can be roughly estimated by assuming that m × efConstruction is a constant.
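The rule of thumb that the product of m and efConstruction stays roughly constant can be turned into a quick estimate. The helper below is a hypothetical sketch, and the starting values (m = 16, efConstruction = 200) are illustrative only, not settings taken from pdf-indexer.

```python
def rescale_ef_construction(old_m: int, old_ef_construction: int, new_m: int) -> int:
    """Estimate efConstruction for a new m, keeping m * efConstruction constant."""
    if old_m < 1 or old_ef_construction < 1 or new_m < 1:
        raise ValueError("all parameters must be positive")
    budget = old_m * old_ef_construction  # the product assumed to stay constant
    return max(1, round(budget / new_m))


if __name__ == "__main__":
    # Illustrative starting point: m = 16, efConstruction = 200.
    print(rescale_ef_construction(16, 200, 32))
    print(rescale_ef_construction(16, 200, 8))
```

The result is only a starting point; the recall check described for ef-construction is still the way to confirm that the chosen value is adequate.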

Nasdanika Demos
